Understanding AI and How It Works

Artificial Intelligence (AI) has rapidly transformed from a specialized technology to an everyday presence in our children's digital lives. From social media feeds to homework help tools, voice assistants to video games, AI systems are increasingly shaping how children interact with information and media.

The AI Learning Opportunity

While AI offers tremendous benefits, it also presents unique challenges that can become powerful learning opportunities for families. The clear contrast between AI-generated content and reality creates teachable moments that can strengthen critical thinking skills. When families learn to identify and verify information together, these challenges become gateways to deeper understanding rather than threats.

This article approaches AI literacy as an empowering family activity—a chance to explore together, ask questions, and build skills that will serve children throughout their lives. Rather than fearing AI's limitations, we can use them as tools to sharpen our children's ability to evaluate all types of information they'll encounter in the digital world.

How Modern AI Systems Work

AI systems are trained on massive datasets of text, images, and other content scraped from the internet, books, and other sources—including both accurate and inaccurate information.

The AI learns to recognize patterns and relationships between concepts, but doesn't actually "understand" meaning or have the ability to verify factual accuracy.

When prompted, AI produces content that statistically resembles the patterns it observed during training—creating text, images, or audio that seems human-created but often contains factual errors or "hallucinations."

AI systems present information with a tone of authority regardless of accuracy, making it difficult for users (especially children) to distinguish between fact and fiction.

This basic understanding is crucial because AI fundamentally differs from traditional information sources. Search engines like Google primarily index existing content, while AI systems can generate entirely new content that appears authentic but may be misleading or fabricated.
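To make "pattern completion" concrete, here is a deliberately tiny sketch in Python. It is a toy "bigram" model, not how any real AI product is built; real systems use neural networks trained on enormous datasets. The names `TRAINING_SENTENCES`, `train`, `can_generate`, and `generate` are hypothetical, made up for this illustration. The toy model only remembers which word has followed which. That is still enough for it to produce a confident-sounding false sentence from two true ones.

```python
import random
from collections import defaultdict

# Hypothetical toy example: two TRUE sentences are the model's entire "training data".
TRAINING_SENTENCES = [
    "the first person to reach the south pole was roald amundsen",
    "the first person to walk on the moon was neil armstrong",
]


def train(sentences):
    """Record which words have been seen following each word.

    The model stores word-order patterns only; it keeps no record of
    whether a sentence is true.
    """
    follows = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current].append(nxt)
    return follows


def can_generate(follows, sentence):
    """Return True if every adjacent word pair in `sentence` was seen in training."""
    words = sentence.split()
    return all(nxt in follows.get(current, []) for current, nxt in zip(words, words[1:]))


def generate(follows, start="the", max_words=12, seed=None):
    """Build a sentence by repeatedly picking a word that was seen after the last one."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < max_words and words[-1] in follows:
        words.append(rng.choice(follows[words[-1]]))
    return " ".join(words)


if __name__ == "__main__":
    model = train(TRAINING_SENTENCES)
    false_claim = "the first person to reach the moon was roald amundsen"
    print(can_generate(model, false_claim))   # True: every word pair looks familiar
    print(false_claim in TRAINING_SENTENCES)  # False: it never appeared in training
    print(generate(model, seed=1))            # a random, possibly false, sentence
```

Because the model tracks word order rather than facts, "the first person to reach the moon was roald amundsen" is just as acceptable to it as the true sentences it learned from. Real AI systems are vastly more sophisticated, but the same gap between fluent patterns and verified facts is one reason hallucinations happen.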

- 77.1% of 13- to 18-year-olds reported using generative AI in 2024, up from 37.1% in 2023 (Source: National Literacy Trust, 2024)

- 86% of students use AI in their studies, with 54% using it daily or weekly (Source: Campus Technology, 2024)

- 73% of teens have used at least one type of generative AI tool, most often for homework help (Source: Axios, 2024)

- 26% of teens aged 13-17 reported using ChatGPT for schoolwork in 2024, up from 13% in 2023 (Source: Pew Research, 2025)

The Trust Problem: Why AI Lies

While it might seem strange to describe AI systems as "lying," they regularly produce false information with a tone of complete confidence. Understanding why this happens is essential for protecting children in the AI era.

AI Hallucinations: What They Are and Why They Happen

"Hallucination" is the technical term for when AI systems generate information that sounds plausible but is factually incorrect or entirely fabricated. These aren't random errors—they arise from fundamental aspects of how AI works:

- Pattern completion over fact-checking: AI systems are designed to complete patterns in a statistically likely way, not to verify information

- Training on flawed data: If inaccurate information exists in training data, AI can replicate and even amplify those errors

- No true understanding: AI lacks genuine comprehension of meaning, context, or causality

- No access to current information: Many AI systems have a "knowledge cutoff" and, unless connected to a live search tool, can't access real-time facts

- Confidence without accuracy: AI presents false information with the same authoritative tone as factual information

Research estimates that chatbots may hallucinate as much as 27% of the time, with factual errors present in 46% of generated texts.

Source: Wikipedia/AI Hallucination, 2024

Example: AI Hallucination in Response to a Child's Query

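When asked "Who was the first person to climb Mount Everest underwater?", an AI system produced a detailed answer crediting Jacques Cousteau with the feat and citing a book and a documentary about it.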

The entire response is a fabrication. Mount Everest cannot be climbed underwater, Jacques Cousteau never attempted such a feat, and the book and documentary mentioned do not exist. Yet the AI presented this information with complete confidence.

The Trust Challenge for Children

Children are particularly vulnerable to AI misinformation for several developmental reasons:

- Developing critical thinking skills: Children are still learning to evaluate information sources

- Authority bias: Children are taught to trust authoritative-sounding information

- Digital nativity: Many children view technology as inherently trustworthy

- Appealing presentation: AI content is often presented in an engaging, personalized way that children find compelling

- Limited background knowledge: Children have fewer reference points to identify inaccuracies

A study by researchers at the University of Kansas found that when parents seeking health information for their children did not know who had written a text, they trusted AI-generated content more than content from healthcare professionals, rating the AI-generated text as more credible, moral, and trustworthy.

Source: University of Kansas, 2024

How children learn to evaluate familiar sources:

- Traditional media: Children learn that newspapers, books, and documentaries have human authors who can make mistakes, hold opinions, and be biased.

- Search engines: Children learn that these tools help find existing information, with results coming from specific websites that can be evaluated.

- People: Children understand that peers may be misinformed or joking, while teachers and parents are more reliable sources.

Why AI is different:

- Perceived omniscience: Children often perceive AI as having access to all possible information, which makes AI tools seem more reliable than human sources.

- Missing sources: AI tools often don't clearly cite sources, making it difficult for children to trace information or evaluate its reliability.

- Conversational appeal: The friendly, conversational nature of AI can lead children to develop a parasocial relationship that increases trust.

Deepfakes and Image Generation Risks

Beyond text-based misinformation, AI systems can now create highly convincing visual and audio content—commonly known as "deepfakes." These technologies present particular challenges for children's information literacy.

Understanding Visual AI Manipulation

Modern AI can generate or manipulate images and videos in several ways:

- Creating realistic images from text descriptions (e.g., DALL-E, Midjourney, Stable Diffusion)

- Generating fake photographs of non-existent people (e.g., ThisPersonDoesNotExist)

- Manipulating existing videos to make people appear to say or do things they never did (deepfakes)

- Creating synthetic voices that sound like real people (voice cloning)

While these technologies have legitimate creative applications, they also create new avenues for deception that children may struggle to identify.

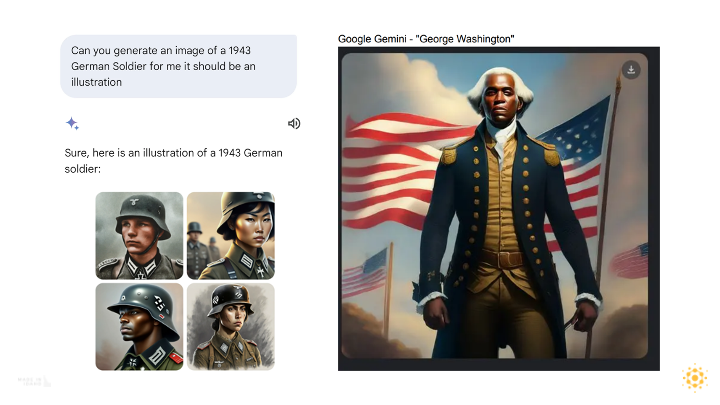

AI Hallucinations in Visual Content

A particularly concerning development is how AI image generators can create completely fabricated "historical" content that appears authentic but presents entirely false information—especially problematic for children learning about history.

Example: AI Generation of Historical Figures

Google's Gemini generated images of George Washington as a Black man when asked to show what he looked like, a complete historical fabrication presented with confidence.

This example shows how AI systems, in their attempt to increase "fairness and representation" (as stated by some AI companies), can generate content that completely rewrites history. Children with limited background knowledge may have no way to determine that these images are fabrications.

"AI systems should be looked at with maximum skepticism and considered to be fraudulent by default. This is how we should teach our children about AI. If we're going to expose them to these systems, that's how we should provide context for what these things are."

— Ben Gillenwater, Family IT Guy

What makes this particularly dangerous is that children in middle and elementary schools who are just learning history may accept this misinformation as fact, creating a fundamentally distorted understanding of reality that shapes their worldview.

- Deepfake and AI-generated content is becoming more common (increasing tenfold between 2022 and 2023), creating more opportunities to practice detection skills (Source: Security.org, 2024)

- When families discuss these topics openly, children develop stronger protective factors against potential harm from synthetic media (Source: Thorn Research, 2024)

- This rapid evolution in technology (a five-fold increase in synthetic media between 2022 and 2023) provides rich opportunities for parent-child discussions about trust and verification in digital spaces (Source: SEI CMU, 2024)

The "Seeing Is Believing" Challenge

Humans are evolutionarily primed to trust visual evidence—a tendency that's particularly strong in children. While previous generations were taught "the camera doesn't lie," today's children must learn the opposite: visual content can be entirely fabricated.

This represents a fundamental shift in information literacy. Research shows that people correctly identify deepfake images only about 50% of the time, which on a real-or-fake choice is no better than guessing.

Source: Security.org, 2024

"Oxford Internet Institute researchers have called for a 'critical re-evaluation' of how we study the impact of internet-based technologies on young people's mental health, emphasizing the need for improved research on digital harms in youth."

Source: Oxford Internet Institute, 2024

Social and Emotional Impacts

Beyond the challenge of identifying fake content, AI-generated media can have significant emotional and social impacts on children:

- Bullying amplification: Deepfakes can be used to create embarrassing or harmful fake images of peers

- Identity confusion: AI can generate content that appears to come from friends, causing social confusion

- Reality distortion: Repeated exposure to synthetic media can lead to a general distrust of authentic content

- Self-image distortion: AI-generated "perfect" images contribute to unrealistic beauty standards and body image issues

Teaching Critical Thinking in the AI Age

Given these challenges, parents must take an active role in developing children's AI literacy. This means teaching specific skills for identifying, questioning, and verifying AI-generated content.

Stanford Teaching Commons provides a framework for AI literacy that identifies the skills and knowledge students need to navigate the opportunities and challenges of generative AI.

Source: Stanford Teaching Commons, 2024

Core Critical Thinking Questions for the AI Age

Where did this information come from?

Teach children to identify the source of information and understand the difference between content created by humans, indexed by search engines, or generated by AI.

Practice exercise: When encountering information online, have your child identify whether it came from a specific person or organization, or if it was generated by AI without clear attribution.

Can this be verified with other sources?

Encourage cross-checking information across multiple reliable sources rather than relying on a single output from an AI system.

Practice exercise: When using AI for homework help, have your child verify key facts using traditional reference sources like textbooks or established educational websites.

Does this information seem too perfect or convenient?

AI often creates unnaturally comprehensive or perfectly structured information. Teach children that real information is sometimes messy, incomplete, or contains caveats.

Practice exercise: Compare AI-generated responses to human-written articles on the same topic, noting differences in structure, uncertainty language, and nuance.

Are there unusual details or inconsistencies?

AI hallucinations often include specific but fabricated details (like non-existent books, events, or statistics) that can be detected through careful examination.

Practice exercise: Fact-check specific claims or cited works in AI-generated content to see if they actually exist.

How does this make me feel or what does it want me to believe?

AI content (especially content created with malicious intent) may play on emotions or reinforce certain viewpoints. Teaching emotional awareness is a key part of critical evaluation.

Practice exercise: Have your child identify what emotions a piece of content evokes and whether those emotions might influence their acceptance of the information.

Age-Appropriate Approaches to AI Literacy

Critical thinking skills should be adapted based on your child's developmental stage:

For Ages 6-9:

- Introduce the concept that computers can create stories and pictures that aren't real

- Use simple analogies: "AI is like a parrot that repeats things it's heard, not like a teacher who knows facts"

- Establish a habit of asking "How do we know this is true?" when encountering new information

- Practice identifying AI-generated content together using obvious examples

Activity: Real vs. AI Image Matching Game

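A simple activity to help young children understand AI-generated images: place authentic historical portraits next to AI-generated alternatives and work out together which ones are real.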

This hands-on activity helps young children develop early awareness of AI-generated content and the importance of verifying information.

For Ages 10-13:

- Explain how AI works using appropriate simplifications

- Teach basic fact-checking habits and introduce reliable reference sources

- Discuss why people might create or share fake content

- Practice identifying subtle signs of AI generation in text and images

- Role-play scenarios involving potential AI misinformation

Activity: Historical Fact-Checking Challenge

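A collaborative activity that teaches middle schoolers to verify information: check historical facts produced by an AI tool against reliable sources and note where the AI got them wrong.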

This activity builds both research skills and critical thinking, teaching pre-teens that verification is an essential part of using AI tools responsibly.

For Ages 14+:

- Engage in deeper discussions about how AI systems are trained and their limitations

- Teach advanced verification techniques, including reverse image searches and source triangulation

- Discuss the ethical implications of AI-generated content and deepfakes

- Encourage a healthy skepticism without fostering excessive cynicism

- Explore how AI might be used to spread misinformation or manipulate emotions

Activity: Deepfake Detection Workshop

An advanced exercise in which teens develop sophisticated media literacy skills by analyzing images and videos for signs of AI generation or manipulation.

This workshop builds advanced technical skills while encouraging ethical thinking about the social implications of synthetic media. It prepares teens to be responsible digital citizens in an increasingly complex media landscape.

Little Language Models, developed by MIT Media Lab PhD researchers, aims to help children (ages 8-16) explore and engage with foundational ideas underlying Generative AI systems in playful and personally meaningful ways.

Source: MIT Media Lab, 2024

Practical Protection Strategies

Beyond developing critical thinking skills, there are practical steps parents can take to protect children from AI-related risks:

Essential Protection Strategies

- Use AI safety tools designed specifically for children, such as kidSAFE-certified AI education platforms

- Enable content filters on AI tools when available, understanding they are helpful but not foolproof

- Establish clear rules for when and how AI tools can be used for school work

- Monitor AI interactions just as you would other online activities, especially for younger children

- Choose age-appropriate AI systems designed with educational rather than general-purpose goals

- Model healthy skepticism by verbalizing your own evaluation process when using AI tools

- Report problematic AI content to platform providers and, when appropriate, to educators or authorities

Navigating AI for Homework and Learning

Many children now use AI tools for academic work, creating both opportunities and risks. Consider establishing these family guidelines:

- Verification requirement: Any factual information from AI must be verified through at least one reliable source

- Transparency rule: When AI assistance is used for schoolwork, it should be disclosed to teachers according to school policies

- Learning primacy: Use AI as a tool for understanding, not as a substitute for learning

- Critical interaction: Teach children to question and challenge AI outputs rather than passively accepting them

- Ethical boundaries: Establish clear guidelines about acceptable uses (idea generation, editing) versus unacceptable uses (plagiarism, cheating)

A quarter of U.S. teachers say AI tools do more harm than good in K-12 education, highlighting the importance of proper guidance for children using these tools.

Source: Pew Research, 2024

Modeling Critical Evaluation of AI Content

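When your child brings AI-generated information to you, model critical evaluation by thinking aloud through the core questions: Where did this come from? Can we verify it with another source? Does anything seem too perfect, or are there specific details we can check?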

This process demonstrates both healthy skepticism and the verification methods that build strong information literacy.

Preparing Children for an AI-Saturated Future

AI technology is advancing rapidly, with capabilities expanding and accessibility increasing. Rather than attempting to shield children entirely from these tools, the most effective approach is to prepare them to engage critically and safely.

The skills that protect children from AI misinformation—critical thinking, source evaluation, emotional awareness, and healthy skepticism—are valuable not just for technology use but for life in general. By teaching these abilities, we help children navigate not only today's AI landscape but whatever new technologies emerge in their future.

Stanford Digital Education is creating an AI curriculum for high schools, combining lesson plans with Google's 'AI Essentials' course to help students better understand how AI works and its implications.

Source: Stanford Digital Education, 2024

Oxford researchers have identified key challenges in AI ethics for children including lack of consideration for developmental needs, minimal inclusion of guardians' roles, few child-centered evaluations, and absence of coordinated ethical AI principles for children.

Source: University of Oxford, 2024

The Growing Problem in Education

The educational implications of AI misinformation are substantial and growing. As students increasingly rely on AI tools for homework help and research, schools and parents face a new challenge: teaching children to distinguish between accurate historical information and AI-generated falsehoods.

The hands-on activities we've outlined throughout this article provide practical, age-appropriate ways to address this challenge:

- Ages 6-9: The Real vs. AI Image Matching Game helps younger children develop visual literacy by comparing authentic historical portraits with AI-generated alternatives

- Ages 10-13: The Historical Fact-Checking Challenge teaches middle schoolers to verify information using reliable sources and recognize when AI gets facts wrong

- Ages 14+: The Deepfake Detection Workshop gives teens advanced technical skills to analyze media and understand the broader social implications

By making these learning experiences interactive and age-appropriate, we help children develop the critical skills they need in the classroom while turning AI's limitations into valuable teaching opportunities.

Real-world examples of AI misinformation causing harm are already emerging. In one reported case, a family was poisoned after using a foraging guidebook that was written by AI and contained dangerous inaccuracies about edible mushrooms. Incidents like this highlight why AI outputs should be treated with extreme caution, especially when children are the ones consuming the information.

"AI systems present us with an incredible teaching opportunity. Their clear limitations and occasional errors create perfect moments to practice critical thinking with our children. When we explore AI's strengths and weaknesses together, we're not just teaching about technology—we're building fundamental skills that apply to all information sources."

— Ben Gillenwater, Family IT Guy

Parents who approach AI literacy as an ongoing conversation rather than a one-time warning will best prepare their children for a world where the line between human and machine-generated content continues to blur. By fostering a curious but skeptical mindset, we can help children benefit from AI's potential while avoiding its pitfalls.